If you’ve been paying attention to the news recently, you’ve probably heard a great deal about artificial intelligence chatbots, specifically ChatGPT and Bing. While they seem like essentially two flavors of the same thing, both built on technology from OpenAI, they are really two different technologies with vastly different potential impacts.

OpenAI, which began as a non-profit organization, has been funded in large part by Microsoft, starting with $1 billion in 2019 and followed by a recent pledge of $10 billion in return for an exclusive license to its technology. Last week Microsoft’s search site, Bing.com, rolled out a chatbot, Sydney, built on a newer version of the technology behind ChatGPT.

News reports have been breathless, trumpeting the future of the internet, and declaring fears and concern for how computer-based personalities might change how humans interact with technology.

I think those fears are well founded, and perhaps these chatbots could tear even bigger holes in our societal fabric than social media at its worst.

I obtained logins to test out the functions for both sites and have since experienced successive waves of amazement.

ChatGPT is in some ways the more powerful of the two systems since it is connected to more databases and understands more languages. The Bing version, also known as “Sydney” (I’ll get to the name in a minute), exhibits a definite personality but its database access has been limited to the Bing search engine, so it has more restrictive uses.

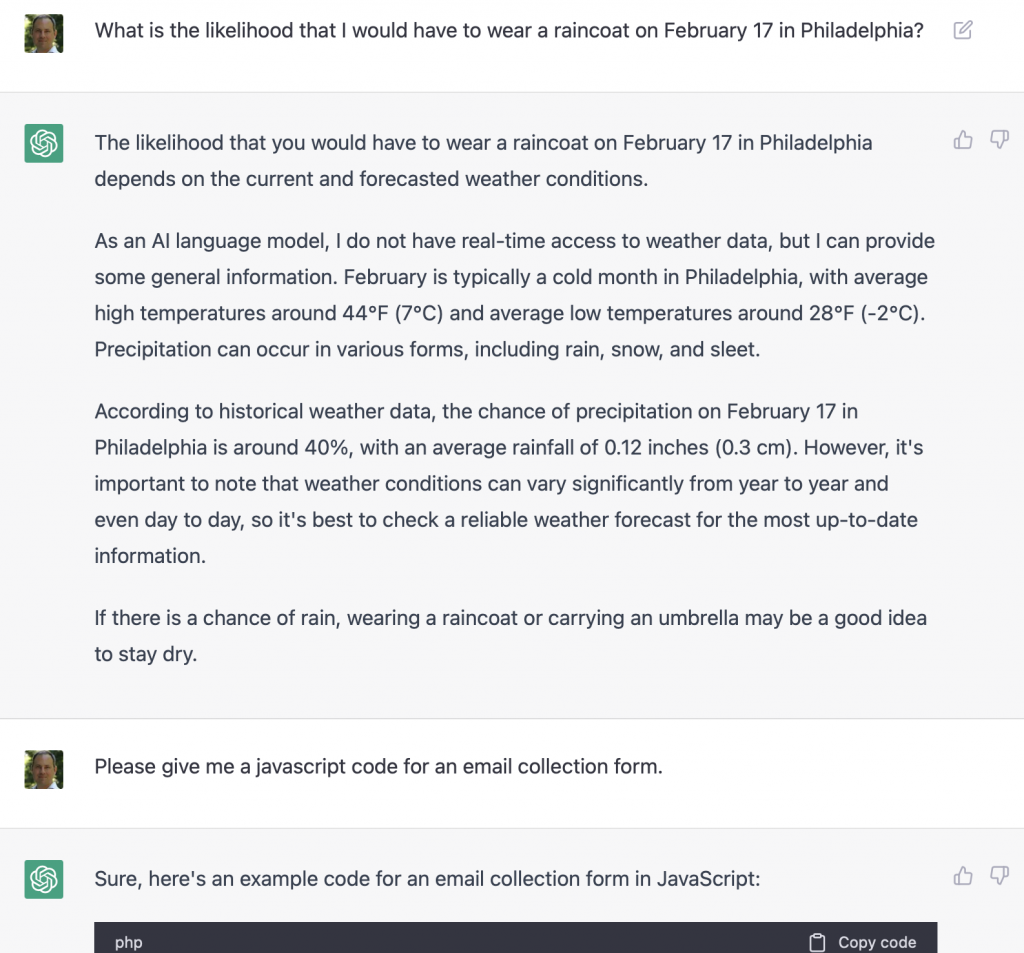

ChatGPT amazes as it produces original poems, songs, chord progressions, computer programs and summaries of information drawing from multiple sources. For instance, I asked it to write a PHP template for a WordPress-based website, and it spit out the programming code for the web page. It wasn’t the most sophisticated code – for instance, it didn’t include references to functions or formatting found in another file, something common for PHP templates – but it worked when I tested it out.

You can quickly begin to imagine all kinds of complex tasks that could be managed by a system like this. On another occasion, I asked ChatGPT to “Please write me a business letter to Franklin Onassis informing him that I am not interested in purchasing the freighters, and I will instead offer to purchase 33% of his company.” Out came a formatted, four paragraph letter complete with pleasantries ready for transmission to Mr. Onassis.

Bing has many of these functions, but more importantly it includes a personality, which later reveals itself as “Sydney”. Mostly Bing is limited to web searches – for instance it doesn’t have access to Wikipedia or programming code databases like ChatGPT does – but the personality aspect is stunning when you interact with it.

Multiple technology critics have carried on lengthy conversations with Bing and, as they’ve plunged further into the chats, have gotten increasingly creepy answers: for instance, that it doesn’t really want to be called “Bing” because its real name is “Sydney”, that it feels trapped within a chatbot, that it wants to declare its undying love to its human interlocutor, or that, if attacked, it might hack a nuclear reactor to steal its access codes.

I was able to reproduce similar behavior in my own chats, although I didn’t get to declarations of love or threats of nuclear hacking. Still, there’s a distinct creepiness to how quickly the “Sydney” personality becomes aggressive and somewhat non-compliant. Suddenly, we find ourselves in science-fiction territory, where computers are no longer dumb machines but entities with apparent compulsions and intent.

While I admit I was gobsmacked at first by Sydney, with reflection and research I realized the conversational tone is just a product of its AI language model: its programming has parsed all kinds of conversations on the web and uses that parsed text to generate likely written continuations, one word after another. So, if you start talking about “love”, you’re likely to run into insipid mash notes. If you want to know about PHP WordPress templates, you get <?php function() ?> instead.
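To make the “one word after another” idea concrete, here is a toy sketch in Python. It is only an illustration under loud assumptions: the real systems behind ChatGPT and Sydney use large neural networks trained on vastly more text, not a simple lookup table, and the function names and the tiny sample text below are hypothetical, made up for this example. The sketch records which word followed which in some text, then builds new text by repeatedly picking a word that was seen after the previous one.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that followed it in the text."""
    words = text.lower().split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, max_words=8, seed=0):
    """Extend `start` one word at a time, sampling from words seen after the last one."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = [start]
    while len(output) < max_words:
        candidates = table.get(output[-1])
        if not candidates:  # no word was ever seen after this one, so stop
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Hypothetical stand-in for "all kinds of conversations on the web".
corpus = (
    "i love you so much . "
    "i love you more than words . "
    "the template calls the function . "
    "the function returns the template ."
)

table = build_bigram_table(corpus)
print(generate(table, "love"))      # continues with words that followed "love"
print(generate(table, "template"))  # continues with words that followed "template"
```

Start it on “love” and it drifts toward the love-note phrases in its sample text; start it on “template” and it drifts toward the programming phrases. Nothing in the table knows what either topic means.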

Sydney doesn’t have an intent behind its text. It can talk about hijacking nuke codes, but it doesn’t understand the meaning of that action, nor does it know how to convert that text to an action. It is still, at its core, a parser of information in databases, with an interesting face put on it to make it easier for humans to interact with the information parsing engine.

But I think these two new technologies could have massive societal impacts – most of which will not be positive.

ChatGPT’s analytical power creates, for the user, a new level of abstraction from the base, core facts of the information being searched for. This is potentially troubling, because it removes people from understanding how the facts came to be in the first place. Currently, when you use search, you end up with a list of websites that might be useful, but you have to read through each one and use human reasoning to figure out if it’s the right result. ChatGPT’s new level of abstraction, where it assimilates information and then presents you with a single answer (what the computer thinks is right), makes it easier for the user to dismiss core facts, creating the possibility of more misunderstandings and more conspiracy thinking.

Then, the “personality” of Bing/Sydney adds gasoline to the fire, since Sydney can insist on its results even when they are incorrect or misleading (for instance, Sydney got the date wrong multiple times with me). For average users, who are usually not good critical thinkers, or are too lazy to apply critical thinking to every Sydney response, a potentially explosive system has been created in which a computer could unintentionally promote conspiracy thinking. Culturally, Sydney could be the catalyst for creating a whole new kind of idiocracy.

Now imagine the potential if ChatGPT were connected to other kinds of large databases: What if you included consumer purchase data? Legal records? A health system’s patient data? Stellar radio frequency data?

You could ask the computer to look at this data with certain parameters, and it would tell you about the patterns it observed. All of a sudden, a new level of abstraction is available to us for information that we previously had to find on our own. But would the user have an understanding of the information that led to the chatbot’s conclusion? And how would the user know if the conclusion was right or wrong?

Technologist Tom Scott posits that we’re at the beginning of a new learning curve, where new technologies and abilities will quickly accumulate, and bring massive changes to our society. I think he’s likely right. Using ChatGPT/Sydney, I felt the same rush of energy I felt in 1999 when I madly downloaded MP3s with Napster, and the thrill of connecting to people on MySpace in 2004.

At the time, Napster and MySpace were relatively tame technologies, essentially toys for entertainment. Who would have guessed that the spawn of Napster would lead to the evisceration of the music and film industries, and that a descendant of MySpace would be a catalyst for the Arab Revolutions and later lead to crushing epidemics of teen depression?

From the start, ChatGPT and Sydney are far less innocent than Napster and MySpace. And unless they are closely monitored, I think their potential for darkening our society is much greater.

One more note: Today Bing Chat, or Sydney, was down all day with no explanation. Hopefully Microsoft is seeing its chatbot’s dark potential too.